("tasks", {

allowApiCrud: Allow.authenticated,

allowApiInsert: "admin",

allowApiDelete: "admin"

})

export class Task {

@Fields.id()

id!: string

@Fields.string({

validate: (task) => {

if (task.title.length < 3) throw "Too Short"

},

allowApiUpdate: "admin"

})

title = ""

@Fields.boolean()

completed = false

}

```

2. Let's give the user _"Jane"_ the `admin` role by adding `admin: true` to her `validUsers` entry and deriving the `roles` array from it.

```ts{3,13}

// src/shared/AuthController.ts

const validUsers = [{ name: "Jane", admin: true }, { name: "Steve" }];

export class AuthController {

@BackendMethod({ allowed: true })

static async signIn(name: string) {

const user = validUsers.find((user) => user.name === name);

if (user) {

remult.user = {

id: user.name,

name: user.name,

roles: user.admin ? ["admin"] : [],

};

remult.context.request!.session!["user"] = remult.user;

return remult.user;

} else {

throw Error("Invalid user, try 'Steve' or 'Jane'");

}

}

```

**Sign in to the app as _"Steve"_ to test that the actions restricted to `admin` users are not allowed. :lock:**

## Role-based Authorization on the Frontend

From a user experience perspective, it only makes sense that users who can't add or delete tasks should not see the corresponding buttons.

Let's reuse the same definitions on the Frontend.

We'll use the entity's metadata to show the form only if the user is allowed to insert:

```vue{8,23}

// src/App.vue

```

This way we can keep the frontend consistent with the `api`'s authorization rules.

- Note: we pass the `task` to the `apiDeleteAllowed` method because the `allowApiDelete` option can be sophisticated and can also depend on the specific item's values.
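
To illustrate why the specific `task` is passed in, here is a minimal, self-contained sketch of an item-aware permission rule. It is a simplified stand-in and not Remult's implementation: the `Rule` type and the `isAllowed` helper are hypothetical names, and the `ownerId` field is an assumption that does not exist on the tutorial's `Task`.

```ts
// Simplified model of a permission rule; NOT Remult's actual implementation.
interface UserInfo {
  id: string
  name?: string
  roles?: string[]
}

interface TaskLike {
  id: string
  title: string
  ownerId: string // hypothetical field, used only for this illustration
}

// A rule can be a constant, a role name, or a function of the user and the item.
type Rule<T> = boolean | string | ((user: UserInfo | undefined, item: T) => boolean)

function isAllowed<T>(rule: Rule<T>, user: UserInfo | undefined, item: T): boolean {
  if (typeof rule === 'boolean') return rule
  if (typeof rule === 'string') return user?.roles?.includes(rule) ?? false
  return rule(user, item)
}

// Admins may delete anything; other users may delete only their own tasks.
const canDelete: Rule<TaskLike> = (user, task) =>
  (user?.roles?.includes('admin') ?? false) || user?.id === task.ownerId

const jane: UserInfo = { id: 'Jane', roles: ['admin'] }
const steve: UserInfo = { id: 'Steve', roles: [] }
const stevesTask: TaskLike = { id: '1', title: 'Pay bills', ownerId: 'Steve' }
const janesTask: TaskLike = { id: '2', title: 'Read a book', ownerId: 'Jane' }
```

With a rule like this, the answer can differ per row: `isAllowed(canDelete, steve, stevesTask)` is `true`, while `isAllowed(canDelete, steve, janesTask)` is `false`. That is why a per-item check needs the item itself.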

# vue - Tutorial - Database

# Database

Up until now the todo app has been using a plain JSON file to store the list of tasks. **In production, we'd like to use a `Postgres` database table instead.**

::: tip Learn more

See the [Quickstart](https://remult.dev/docs/quickstart.html#connecting-a-database) article for the (long) list of relational and non-relational databases Remult supports.

:::

::: warning Don't have Postgres installed? Don't have to.

Don't worry if you don't have Postgres installed locally. In the next step of the tutorial, we'll configure the app to use Postgres in production, and keep using JSON files in our dev environment.

**Simply install `postgres-node` per step 1 below and move on to the [Deployment section of the tutorial](deployment.md).**

:::

1. Install `postgres-node` ("pg").

```sh

npm i pg

```

2. Add the highlighted code to the `api` server module.

```ts{5,9-11}

// src/server/api.ts

//...

import { createPostgresDataProvider } from "remult/postgres"

export const api = remultApi({

//...

dataProvider: createPostgresDataProvider({

connectionString: "your connection string"

})

})

```

# vue - Tutorial - Deployment

# Deployment

Let's deploy the todo app to [railway.app](https://railway.app/).

## Prepare for Production

In this tutorial, we'll deploy both the Vue app and the API server as [one server-side app](https://create-react-app.dev/docs/deployment/#other-solutions), and redirect all non-API requests to return the Vue app.

We will deploy an ESM Node.js server project.

In addition, to follow a few basic production best practices, we'll use [compression](https://www.npmjs.com/package/compression) middleware to improve performance and [helmet](https://www.npmjs.com/package/helmet) middleware for security.

1. Add the highlighted code lines to `src/server/index.ts`, and modify the `app.listen` function's `port` argument to prefer a port number provided by the production host's `PORT` environment variable.

```ts{16-21}

// src/server/index.ts

import express from "express"

import { api } from "./api.js"

import session from "cookie-session"

import { auth } from "./auth.js"

const app = express()

app.use(

session({

secret: process.env["SESSION_SECRET"] || "my secret"

})

)

app.use(auth)

app.use(api)

const frontendFiles = process.cwd() + "/dist";

app.use(express.static(frontendFiles));

app.get("/*", (_, res) => {

res.sendFile(frontendFiles + "/index.html");

});

app.listen(process.env["PORT"] || 3002, () => console.log("Server started"));

```

3. Modify the highlighted code in the api server module to prefer a `connectionString` provided by the production host's `DATABASE_URL` environment variable.

```ts{4,7-9}

// src/server/api.ts

//...

const DATABASE_URL = process.env["DATABASE_URL"];

export const api = remultApi({

dataProvider: DATABASE_URL

? createPostgresDataProvider({ connectionString: DATABASE_URL })

: undefined,

//...

})

```

::: warning Note

To connect to a local Postgres database, add `DATABASE_URL` to a `.env` file, or simply replace `process.env["DATABASE_URL"]` with your `connectionString`.

If no `DATABASE_URL` is found, the app falls back to the local JSON files.

:::
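
The environment-driven choices made in the steps above (the `PORT` preference and the `DATABASE_URL` fallback) can be sketched as one small function. This is only an illustration under stated assumptions: `resolveConfig` and `ServerConfig` are hypothetical names, and a plain record stands in for `process.env` so the snippet runs without Node.js typings.

```ts
// A plain record stands in for process.env so the sketch runs anywhere.
type Env = Record<string, string | undefined>

interface ServerConfig {
  port: number
  // undefined means "no Postgres configured, keep using the local JSON files"
  databaseUrl: string | undefined
}

function resolveConfig(env: Env): ServerConfig {
  // Prefer the host-provided PORT; fall back to the tutorial's 3002.
  const port = Number(env['PORT']) || 3002
  // Treat a missing or empty DATABASE_URL as "not configured".
  const databaseUrl = env['DATABASE_URL'] || undefined
  return { port, databaseUrl }
}

const production = resolveConfig({ PORT: '8080', DATABASE_URL: 'postgres://example' })
const development = resolveConfig({})
```

Here `development.databaseUrl` is `undefined`, which corresponds to passing `dataProvider: undefined` in the snippet above.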

4. Modify the project's `build` npm script to additionally transpile the API server's TypeScript code to JavaScript (using `tsc`).

```json

// package.json

"build": "run-p type-check \"build-only {@}\" -- && tsc -p tsconfig.server.json"

```

5. Add a `start` npm script to start the production Node.js server.

```json

// package.json

"start": "node dist/server/"

```

The todo app is now ready for deployment to production.

## Test Locally

To test the application locally, run:

```sh

npm run build

npm run start

```

::: warning Build Errors

If you get an error `error TS5096: Option 'allowImportingTsExtensions' can only be used when either 'noEmit' or 'emitDeclarationOnly' is set.` do not set the `emitDeclarationOnly` flag!

You are getting the error because somewhere in your code you've imported from `.ts` instead of `.js`. Fix it and build again.

:::

Now navigate to http://localhost:3002 and test the application locally.

## Deploy to Railway

In order to deploy the todo app to [railway](https://railway.app/) you'll need a `railway` account. You'll also need the [Railway CLI](https://docs.railway.app/develop/cli#npm) installed, and you'll need to log in to Railway from the CLI using `railway login`.

Press Enter multiple times to answer all of its questions with the default answer.

1. Create a Railway `project`.

From the terminal in your project folder run:

```sh

railway init

```

2. Set a project name.

3. Once that's done run the following command to open the project on railway.dev:

```sh

railway open

```

4. Once that's done run the following command to upload the project to railway:

```sh

railway up

```

5. Add Postgres Database:

1. In the project on `railway.dev`, click `+ Create`

2. Select `Database`

3. Select `Add PostgreSQL`

6. Configure the environment variables

1. Click on the project card (not the Postgres one)

2. Switch to the `variables` tab

3. Click on `+ New Variable`, and in the `VARIABLE_NAME` click `Add Reference` and select `DATABASE_URL`

4. Add another variable called `SESSION_SECRET` and set it to a random string. You can use an [online UUID generator](https://www.uuidgenerator.net/) to create one.

5. Switch to the `settings` tab

6. Under `Environment` click on `Generate Domain`

7. Click on the `Deploy` button on the top left.

7. Once the deployment is complete, click on the newly generated URL to open the app in the browser and you'll see the app live in production. (It may take a few minutes to go live.)

::: warning Note

If you run into trouble deploying the app to Railway, try using Railway's [documentation](https://docs.railway.app/deploy/deployments).

:::

That's it - our application is deployed to production. Play with it and enjoy.

To see a larger, more complex code base, visit our [CRM example project](https://www.github.com/remult/crm-demo).

Love Remult? Give our repo a star.⭐

# SvelteKit - Tutorial - Setup

# Build a Full-Stack SvelteKit Application

### Create a simple todo app with Remult using SvelteKit

In this tutorial, we are going to create a simple app to manage a task list. We'll use `SvelteKit` for the UI & the backend and Remult as our full-stack CRUD framework.

By the end of the tutorial, you should have a basic understanding of Remult and how to use it to accelerate and simplify full stack app development.

::: tip Want to have a look at the end result?

You can `degit` the final result and read the `README.md` file in the project to check it out.

```sh

npx degit remult/remult/examples/sveltekit-todo remult-sveltekit-todo

cd remult-sveltekit-todo

```

:::

### Prerequisites

This tutorial assumes you are familiar with `SvelteKit`.

Before you begin, make sure you have [Node.js](https://nodejs.org) and [git](https://git-scm.com/) installed.

# Setup for the Tutorial

This tutorial requires setting up a SvelteKit project, and a few lines of code to add Remult.

## Step-by-step Setup

### Create a SvelteKit project

Create the new SvelteKit project.

```sh

npx sv@latest create remult-sveltekit-todo

```

The command prompts you for information about features to include in the initial app project. Here are the answers used in this tutorial:

1. **Which Svelte app template?**: ... `minimal` Project

2. **Add type checking with TypeScript?** ... Yes, using `TypeScript` syntax

3. **Select additional options**: ... We didn't select anything for this tutorial. Feel free to adapt it to your needs.

4. **Which package manager?**: ... We chose `npm`; if you prefer another, feel free to use it.

Once completed, change to the app directory:

```sh

cd remult-sveltekit-todo

```

### Install required packages and Remult

```sh

npm i remult --save-dev

```

### Bootstrap Remult

1. Open your IDE.

2. Create your remult `api`

::: code-group

```ts [src/server/api.ts]

import { remultApi } from 'remult/remult-sveltekit'

export const api = remultApi({})

```

:::

3. Create a remult `api route`

::: code-group

```ts [src/routes/api/[...remult]/+server.ts]

import { api } from '../../../server/api'

export const { GET, POST, PUT, DELETE } = api

```

:::

### Final tweaks

Our full stack starter project is almost ready.

Remult makes use of decorators to enhance regular TypeScript classes into entities. Add the following entry to the `compilerOptions` section of the `tsconfig.json` file to enable the use of decorators.

::: code-group

```json [tsconfig.json]

{

"compilerOptions": {

"experimentalDecorators": true // [!code ++]

}

}

```

:::

### Run the app

Open a terminal and start the Vite dev server.

```sh

npm run dev

```

The default "SvelteKit" app main screen should be available at the default Vite dev server address http://localhost:5173.

### Setup completed

At this point, our starter project is up and running. We are now ready to move to the [next step of the tutorial](./entities.md) and start creating the task list app.

# SvelteKit - Tutorial - Entities

# Entities

Let's start coding the app by defining the `Task` entity class.

The `Task` entity class will be used:

- As a model class for client-side code

- As a model class for server-side code

- By `remult` to generate API endpoints, API queries, and database commands

The `Task` entity class we're creating will have an auto-generated `id` field, a `title` field, a `completed` field and an auto-generated `createdAt` field. The entity's API route ("tasks") will include endpoints for all `CRUD` operations.

## Define the Model

1. Create a `shared` folder under the `src` folder. This folder will contain code shared between the frontend and the backend.

2. Create a file `Task.ts` in the `src/shared/` folder, with the following code:

::: code-group

```ts [src/shared/Task.ts]

import { Entity, Fields } from 'remult'

@Entity('tasks', {

allowApiCrud: true,

})

export class Task {

@Fields.id()

id!: string

@Fields.string()

title: string = ''

@Fields.boolean()

completed: boolean = false

@Fields.createdAt()

createdAt?: Date

}

```

:::

The [@Entity](../../docs/ref_entity.md) decorator tells Remult that this class is an entity class. The decorator accepts a `key` argument (used to name the API route and as the default database collection/table name), and an optional `options` object of type `EntityOptions`. This is used to define entity-related properties and operations, discussed in the next sections of this tutorial.

Initially, we are going to allow all CRUD operations on tasks, by setting the option [allowApiCrud](../../docs/ref_entity.md#allowapicrud) to `true`.

The [@Fields.id](../../docs/field-types.md#fields-id) decorator tells Remult to automatically generate a unique id using `crypto.randomUUID()`. This value can't be changed after the entity is created.

The [@Fields.string](../../docs/field-types.md#fields-string) decorator tells Remult the `title` property is an entity data field of type `String`. This decorator is also used to define field-related properties and operations, discussed in the next sections of this tutorial. The same goes for `@Fields.boolean` and the `completed` property.

The [@Fields.createdAt](../../docs/field-types.md#fields-createdat) decorator tells Remult to automatically generate a `createdAt` field with the current date and time.

::: tip

For a complete list of supported field types, see the [Field Types](../../docs/field-types.md) section in the Remult documentation.

:::

3. Register the `Task` entity with Remult by adding `entities: [Task]` to the `options` object that is passed to `remultApi()`:

::: code-group

```ts [src/server/api.ts]

import { remultApi } from 'remult/remult-sveltekit'

import { Task } from '../shared/Task' // [!code ++]

export const api = remultApi({

entities: [Task], // [!code ++]

})

```

:::

## Test the API

Now that the `Task` entity is defined, we can start using the REST API to query and add tasks. By default Remult exposes the `/api/` endpoint. Resources (entities) can then be accessed by appending the entity's `key` -- _tasks_ in this case.

1. Open a browser with the url: [http://localhost:5173/api/tasks](http://localhost:5173/api/tasks), and you'll see that you get an empty array.

2. Use `curl` to `POST` a new task - _Clean car_. If you prefer, you can use a graphical tool such as Postman, Insomnia or Thunder Client.

```sh

curl http://localhost:5173/api/tasks -d "{\"title\": \"Clean car\"}" -H "Content-Type: application/json"

```

3. Refresh the browser for the url: [http://localhost:5173/api/tasks](http://localhost:5173/api/tasks) and notice that the array now contains one item.

4. The `POST` endpoint can accept a single `Task` or an array of `Task`s. Add a few more tasks:

```sh

curl http://localhost:5173/api/tasks -d "[{\"title\": \"Read a book\"},{\"title\": \"Take a nap\", \"completed\":true },{\"title\": \"Pay bills\"},{\"title\": \"Do laundry\"}]" -H "Content-Type: application/json"

```

5. Refresh the browser again, to see that the new tasks were stored in the db.

::: warning Wait, where is the backend database?

While Remult supports [many relational and non-relational databases](https://remult.dev/docs/installation/database/), in this tutorial we start off by storing entity data in a backend **JSON file**. Notice that a `db` folder has been created under the root folder, with a `tasks.json` file containing the created tasks.

:::

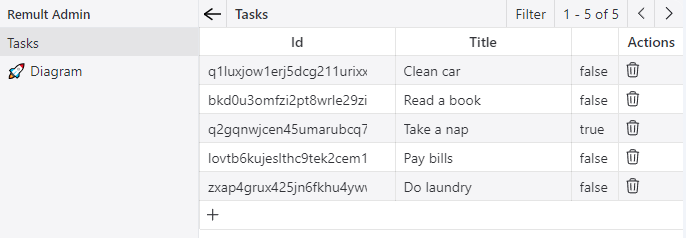

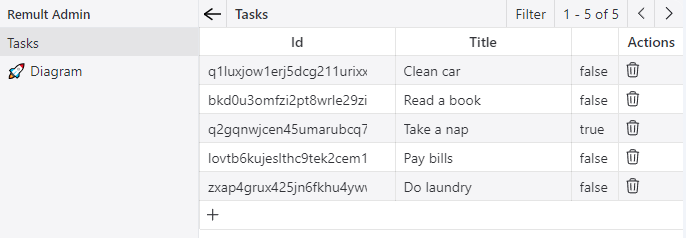

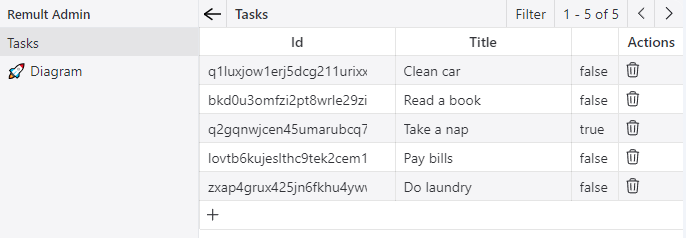

## Admin UI

### Enabling the Admin UI

Add the Admin UI to your SvelteKit application by setting the `admin` option to `true` in the `remultApi()` options:

::: code-group

```ts [src/server/api.ts]

import { remultApi } from 'remult/remult-sveltekit'

import { Task } from '../shared/Task'

export const api = remultApi({

entities: [Task],

admin: true, // Enable the Admin UI

})

```

:::

### Accessing and Using the Admin UI

Navigate to `http://localhost:5173/api/admin` to access the Admin UI. Here, you can perform CRUD operations on your entities, view their relationships via the Diagram entry, and ensure secure management with the same validations and authorizations as your application.

### Features

- **CRUD Operations**: Directly create, update, and delete tasks through the Admin UI.

- **Entity Diagram**: Visualize relationships between entities for better data structure understanding.

- **Security**: Operations are secure, adhering to application-defined rules.

## Display the Task List

Let's start developing the web app by displaying the list of existing tasks.

Let's do it simply in the root of the app by adding this code in `+page.svelte`:

::: code-group

```svelte [src/routes/+page.svelte]

```

:::

Here's a quick overview of the different parts of the code snippet:

- `remult.repo(Task)` is a Remult [Repository](../../docs/ref_repository.md) object used to fetch and create Task entity objects.

- `$effect` is used to call the Remult [repository](../../docs/ref_repository.md)'s [find](../../docs/ref_repository.md#find) method to fetch tasks from the server, once when the component is loaded.

After the browser refreshes, the list of tasks appears.

### Styling the Output

Remult is unopinionated as far as front-end styling is concerned. To demonstrate, let's style our app using vanilla CSS.

Simply create these 2 files:

::: code-group

```svelte [src/routes/+layout.svelte]

Remult+Sveltekit Todo App

{@render children?.()}

```

```css [src/app.css]

@charset "utf-8";

body {

font-family: Arial;

background-color: whitesmoke;

justify-content: center;

margin: 0;

}

h1 {

color: #ef4444;

font-style: italic;

font-size: 3.75rem;

font-weight: inherit;

text-align: center;

}

main {

max-width: 500px;

min-width: 300px;

margin: auto;

background-color: white;

box-sizing: border-box;

border: 1px solid lightgray;

border-radius: 0.5rem;

box-shadow:

0 2px 4px #0003,

0 25px 50px #0000001a;

}

main > div,

main > form {

padding: 0.5rem 1rem;

border-bottom: 1px solid lightgray;

display: flex;

align-items: center;

gap: 0.25rem;

justify-content: space-between;

}

main > div:has(input[type='checkbox']) {

justify-content: inherit;

}

input {

font-family: inherit;

font-size: 100%;

width: 100%;

border: 0;

padding: 0.5rem;

}

input:checked + input,

input:checked + span {

text-decoration: line-through;

}

input:placeholder-shown {

font-style: italic;

}

input[type='checkbox'] {

width: 36px;

height: 36px;

height: 1.5rem;

}

button {

cursor: pointer;

padding: 0.5rem 0.5rem;

background-color: white;

font-family: inherit;

font-size: 85%;

line-height: inherit;

border: 2px solid #0000001a;

border-radius: 0.5rem;

}

```

:::

...and voilà, our app should look much better! Feel free to improve or substitute the styling as you see fit.

::: tip

The styles imported into `src/routes/+layout.svelte` will apply to all pages in the app - unless explicitly overridden.

:::

# SvelteKit - Tutorial - Paging, Sorting and Filtering

# Paging, Sorting and Filtering

The RESTful API created by Remult supports **server-side paging, sorting, and filtering**. Let's use that to limit, sort and filter the list of tasks.

## Limit Number of Fetched Tasks

Since our database may eventually contain a lot of tasks, it makes sense to use a **paging strategy** to limit the number of tasks retrieved in a single fetch from the back-end database.

Let's limit the number of fetched tasks to `20`.

To do so, simply pass a `limit` option to the `find` method call:

::: code-group

```svelte [src/routes/+page.svelte]

$effect(() => {

repo(Task)

.find(

{ limit: 20 } // [!code ++]

)

.then((t) => (tasks = t));

});

```

:::

Depending on the number of tasks that you have added, you may not have enough tasks in the database for this change to have an immediate visible effect, but it will have one later on when we add more tasks.

::: tip

Using `limit` only returns the first page of data. To query subsequent pages, use the [Repository.find()](../../docs/ref_repository.md#find) method's `page` option.

:::
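
The relationship between `limit` and `page` can be sketched with a plain array. This is an in-memory illustration and not Remult's code: `paginate` is a hypothetical helper, and it assumes pages are numbered starting from 1.

```ts
// In-memory stand-in for server-side paging; pages are assumed to start at 1.
interface PagingOptions {
  limit: number
  page?: number
}

function paginate<T>(items: readonly T[], { limit, page = 1 }: PagingOptions): T[] {
  // Skip the rows that belong to earlier pages, then take one page's worth.
  const start = (Math.max(page, 1) - 1) * limit
  return items.slice(start, start + limit)
}

// 45 sample titles: "Task 1" .. "Task 45"
const titles = Array.from({ length: 45 }, (_, i) => `Task ${i + 1}`)

const firstPage = paginate(titles, { limit: 20 }) // "Task 1" .. "Task 20"
const thirdPage = paginate(titles, { limit: 20, page: 3 }) // "Task 41" .. "Task 45"
```

With 45 tasks and a limit of 20, the third page holds only the remaining 5 items, and any later page is empty.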

## Sorting By Creation Date

We would like old tasks to appear first in the list, and new tasks to appear last. Let's sort the tasks by their `createdAt` field.

Set the `orderBy` property of the `find` method call's `options` argument to an object that contains the fields you want to sort by.

Use "asc" and "desc" to determine the sort order.

::: code-group

```svelte [src/routes/+page.svelte]

$effect(() => {

repo(Task)

.find({

limit: 20,

orderBy: { createdAt: "asc" } // [!code ++]

})

.then((t) => (tasks = t));

});

```

:::

## Filtering

Remult supports sending filter rules to the server to query only the tasks that we need.

Adjust your function to fetch only `completed` tasks.

::: code-group

```svelte [src/routes/+page.svelte]

$effect(() => {

repo(Task)

.find({

limit: 20,

orderBy: { createdAt: "asc" },

where: { completed: true } // [!code ++]

})

.then((t) => (tasks = t));

});

```

:::

::: warning NOTE:

Because the `completed` field is of type `boolean`, the argument is **compile-time checked to be of the `boolean` type**. Setting the `completed` filter to `undefined` causes it to be ignored by Remult.

:::
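
The effect of an `undefined` filter value can be pictured with a small in-memory matcher. This is a simplified sketch, not how Remult evaluates filters on the server; `TaskFilter` and `matches` are hypothetical names, and the sample data reuses the tasks posted earlier in the tutorial.

```ts
interface Task {
  title: string
  completed: boolean
}

// Every key is optional; a key that is missing or undefined places no constraint.
type TaskFilter = {
  title?: string | undefined
  completed?: boolean | undefined
}

function matches(task: Task, where: TaskFilter): boolean {
  if (where.completed !== undefined && task.completed !== where.completed) return false
  if (where.title !== undefined && task.title !== where.title) return false
  return true
}

const sampleTasks: Task[] = [
  { title: 'Clean car', completed: false },
  { title: 'Read a book', completed: false },
  { title: 'Take a nap', completed: true },
  { title: 'Pay bills', completed: false },
  { title: 'Do laundry', completed: false },
]

const onlyCompleted = sampleTasks.filter((t) => matches(t, { completed: true }))
const unfiltered = sampleTasks.filter((t) => matches(t, { completed: undefined }))
```

In this sketch `onlyCompleted` contains just "Take a nap", while `unfiltered` contains all five tasks because the `undefined` value is skipped.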

Play with different filtering values, and eventually comment the filter out, since we do need all the tasks:

```svelte [src/routes/+page.svelte] {6}

$effect(() => {

repo(Task)

.find({

limit: 20,

orderBy: { createdAt: "asc" }

// where: { completed: true }

})

.then((t) => (tasks = t));

});

```

::: tip Learn more

Explore the reference for a [comprehensive list of filtering options](../../docs/entityFilter.md).

:::

# SvelteKit - Tutorial - CRUD Operations

# CRUD Operations

## Adding new tasks

Now that we can see the list of tasks, it's time to add a few more. We'll create a form which executes the `addTask` function, which in turn invokes `repo(Task).insert()`. Update your `+page.svelte` as follows:

::: code-group

```svelte [src/routes/+page.svelte]

```

:::

The call to `insert` will make a `POST` request to the server, insert the new task into the db, and return the new `Task` object with all its info (including the id generated by the database).

Try adding a few tasks to see how it works.

## Mark Tasks as Completed

1. Add a `setCompleted` function in the script section as follows:

```ts

const setCompleted = async (task: Task, completed: boolean) => {

await repo(Task).save({ ...task, completed })

}

```

2. Modify the checkbox to invoke the method:

```svelte

setCompleted(task, e.currentTarget.checked)}

/>

{task.title}

```

## Rename Tasks

To make the tasks in the list updatable, we'll use an `input` element and bind it to the task's `title` property. We'll also add a _Save_ button to commit the changes to the backend database.

1. Add a `saveTask` function in the script section as follows:

```ts

const saveTask = async (e: Event, task: Task) => {

e.preventDefault()

await repo(Task).save({ ...task })

}

```

2. Update the HTML part:

```svelte

{#each tasks as task}

setCompleted(task, e.currentTarget.checked)}

/>

{/each}

```

The `saveTask` function saves the task that is passed in. Since the task's title is bound to the `input`, changes are made directly to the task.

Make some changes and refresh the browser to verify that the backend database is updated.

::: tip Browser's Network tab

As you play with these `CRUD` capabilities, monitor the network tab and see that they are all translated to `REST` API calls.

:::

## Delete Tasks

Let's add a _Delete_ button next to the **Save** button of each task in the list.

1. Add the `deleteTask` function

```ts

const deleteTask = async (e: Event, task: Task) => {

e.preventDefault()

await repo(Task).delete(task)

tasks = tasks.filter((c) => c.id !== task.id)

}

```

2. Add the **Delete** button

```svelte

{#each tasks as task}

setCompleted(task, e.currentTarget.checked)}

/>

// [!code ++]

{/each}

```

# SvelteKit - Tutorial - Validation

# Validation

Validating user input is usually required both on the client-side and on the server-side, often causing a violation of the [DRY](https://en.wikipedia.org/wiki/Don%27t_repeat_yourself) design principle. **With Remult, validation code can be placed within the entity class, and Remult will run the validation logic on both the frontend and the relevant API requests.**

::: warning Handling validation errors

When a validation error occurs, Remult will throw an exception.

In this tutorial, we will catch these exceptions, and alert the user.

:::

## Validate the Title Field

Task titles are required. Let's add a validity check for this rule.

1. In the `Task` entity class, modify the `Fields.string` decorator for the `title` field to include an object literal argument and set the object's `validate` property to `Validators.required`.

::: code-group

```ts [src/shared/Task.ts]

import { Validators } from 'remult';

@Fields.string({

validate: Validators.required

})

title: string = '';

```

:::

2. In `+page.svelte`, surround the body of `addTask` with a `try-catch` block to capture the error:

::: code-group

```svelte [src/routes/+page.svelte]

let newTaskTitle = $state("");

const addTask = async (event: Event) => {

event.preventDefault();

try {// [!code ++]

const newTask = await repo(Task).insert({ title: newTaskTitle });

tasks = [...tasks, newTask];

newTaskTitle = "";

} catch (error) {// [!code ++]

alert((error as { message: string }).message);// [!code ++]

}// [!code ++]

};

```

:::

After the browser is refreshed, try creating a new task or saving an existing one with an empty title - the "**Title: Should not be empty**" error message is displayed.

Surround all the other functions with `try-catch` in a similar manner and notify the user accordingly.

### Implicit server-side validation

The validation code we've added is called by Remult on the server-side to validate any API calls attempting to modify the `title` field.

Try making the following `POST` http request to the `http://localhost:5173/api/tasks` endpoint, providing an invalid title.

```sh

curl -i http://localhost:5173/api/tasks -d "{\"title\": \"\"}" -H "Content-Type: application/json"

```

An HTTP **400 Bad Request** error is returned and the validation error text is included in the body:

```ts

{

modelState: { title: 'Should not be empty' },

message: 'Title: Should not be empty'

}

```

::: tip

You should probably update all your code to handle these errors gracefully with `try-catch` blocks.

:::
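
As one way to handle these errors in a single place, the following sketch turns a caught value into a user-facing message. It assumes only the error shape shown in the response above (`message` plus `modelState`); the `ValidationErrorBody` interface and the `describeError` helper are hypothetical names, not part of Remult.

```ts
// Shape of the error body shown above; both members are treated as optional here.
interface ValidationErrorBody {
  message?: string
  modelState?: Record<string, string>
}

function describeError(error: unknown): string {
  // Validators may throw plain strings.
  if (typeof error === 'string') return error
  if (typeof error === 'object' && error !== null) {
    const { message, modelState } = error as ValidationErrorBody
    if (message) return message
    if (modelState) {
      // Fall back to listing the per-field errors.
      return Object.entries(modelState)
        .map(([field, text]) => `${field}: ${text}`)
        .join('; ')
    }
  }
  return 'Unknown error'
}

const fromServer = describeError({
  modelState: { title: 'Should not be empty' },
  message: 'Title: Should not be empty',
})
```

Inside a `catch (error)` block, `alert(describeError(error))` could then replace the inline cast used in `addTask`.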

## Custom Validation

Remult lets you easily create your own validation rules.

The `validate` property accepts an arrow function which receives an instance of the entity to be validated. This function will be called to validate input on both the front-end and the back-end.

Try something like this and see what happens:

::: code-group

```ts [src/shared/Task.ts]

@Fields.string({

validate: (task) => {

if (task.title.length < 3) throw "The title must be at least 3 characters long"

}

})

title = ""

```

:::
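
To make the "throw to reject" convention concrete, here is a standalone sketch of running such validator functions and collecting what they throw. It is a simplified model rather than Remult's validation pipeline; `Validator`, `minLength` and `collectErrors` are hypothetical names.

```ts
interface Task {
  title: string
}

// A validator receives the entity and throws a message when the entity is invalid.
type Validator<T> = (entity: T) => void

// Builds a validator like the one above, parameterized by the minimum length.
const minLength =
  (min: number): Validator<Task> =>
  (task) => {
    if (task.title.length < min) throw `The title must be at least ${min} characters long`
  }

// Runs every validator and collects the thrown messages instead of stopping at the first.
function collectErrors<T>(entity: T, validators: Validator<T>[]): string[] {
  const errors: string[] = []
  for (const validate of validators) {
    try {
      validate(entity)
    } catch (e) {
      errors.push(typeof e === 'string' ? e : String(e))
    }
  }
  return errors
}

const tooShort = collectErrors({ title: 'ab' }, [minLength(3)])
const valid = collectErrors({ title: 'Pay bills' }, [minLength(3)])
```

Because the rule is an ordinary function of the entity, the same code can run in the browser before a request is sent and again on the server when the request arrives.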

# SvelteKit - Tutorial - Live Queries

# Live Queries

Our todo list app can have multiple users using it at the same time. However, changes made by one user are not seen by others unless they manually refresh their browser.

Let's add realtime multiplayer capabilities to this app.

## Realtime updated todo list

Let's update our component as follows _(make sure you add and remove some lines as indicated)_:

::: code-group

```svelte [src/routes/+page.svelte]

```

:::

Let's review the change:

- Instead of calling the `repository`'s `find` method we now call the `liveQuery` method to define the query, and then call its `subscribe` method to establish a subscription which will update the Tasks state in realtime.

- The `subscribe` method accepts a callback with an `info` object that has 3 members:

  - `items` - an up-to-date list of items representing the current result; it's useful for read-only use cases.

  - `applyChanges` - a method that receives an array and applies the changes to it; we use it to apply the incoming changes to the existing `tasks` state.

  - `changes` - a detailed list of the changes that were received.

- The `subscribe` method returns an `unsubscribe` function. By returning it from the `$effect` callback, we automatically unsubscribe when the component unmounts. _(You can also do it manually in an `onDestroy` hook.)_

- As all relevant CRUD operations (made by all users) will **immediately update the component's state**, we removed the manual adding/removing of new Tasks to the component's state.
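
The idea behind `applyChanges` can be sketched as a pure function over an array. The change records below are a hypothetical shape chosen for illustration (the real `changes` entries may look different), and this standalone `applyChanges` is a stand-in for the method Remult provides on `info`.

```ts
interface Task {
  id: string
  title: string
  completed: boolean
}

// Hypothetical change records; the real `changes` entries may be shaped differently.
type Change =
  | { type: 'add'; item: Task }
  | { type: 'replace'; item: Task }
  | { type: 'remove'; id: string }

// Returns a new array, so assigning the result to a state variable triggers an update.
function applyChanges(current: readonly Task[], changes: readonly Change[]): Task[] {
  let result = [...current]
  for (const change of changes) {
    switch (change.type) {
      case 'add':
        result.push(change.item)
        break
      case 'replace': {
        const { item } = change
        result = result.map((t) => (t.id === item.id ? item : t))
        break
      }
      case 'remove': {
        const { id } = change
        result = result.filter((t) => t.id !== id)
        break
      }
    }
  }
  return result
}

const before: Task[] = [
  { id: 'a', title: 'Clean car', completed: false },
  { id: 'b', title: 'Read a book', completed: false },
]

const after = applyChanges(before, [
  { type: 'add', item: { id: 'c', title: 'Pay bills', completed: false } },
  { type: 'replace', item: { id: 'b', title: 'Read a book', completed: true } },
  { type: 'remove', id: 'a' },
])
```

Applying incremental changes this way keeps the existing array contents and only touches the affected rows, instead of re-fetching the whole list on every update.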

Open the todo app in two (or more) browser windows/tabs, make some changes in one window and notice how the others are updated in realtime.

::: tip Under the hood

The default implementation of live-queries uses HTTP Server-Sent Events (SSE) to push realtime updates to clients, and stores live-query information in-memory.

For serverless environments _(or multi servers)_, live-query updates can be pushed using integration with third-party realtime providers, such as [Ably](https://ably.com/) (or others), and live-query information can be stored to any database supported by Remult.

:::

# SvelteKit - Tutorial - Backend methods

# Backend methods

When performing operations on multiple entity objects, performance considerations may necessitate running them on the server. **With Remult, moving client-side logic to run on the server is a simple refactoring**.

## Set All Tasks as Un/complete

Let's add two buttons to the todo app: "Set all as completed" and "Set all as uncompleted".

1. Add a `setAllCompleted` async function to the `+page.svelte` component. It accepts a `completed` boolean argument and sets the value of the `completed` field of all the tasks accordingly.

::: code-group

```svelte [src/routes/+page.svelte]

```

:::

The `for` loop iterates the array of `Task` objects returned from the backend, and saves each task back to the backend with a modified value in the `completed` field.

2. Add the two buttons to the end of the `` section of the markup. Both of the buttons' `on:click` events will call the `setAllCompleted` function with the appropriate value of the `completed` argument.

::: code-group

```svelte [src/routes/+page.svelte]

```

:::

Make sure the buttons are working as expected before moving on to the next step.

## Refactor from Front-end to Back-end

With the current state of the `setAllCompleted` function, each modified task being saved pushes a `PUT` request handled separately by the server. As the number of tasks in the todo list grows, this may become a performance issue. You can verify this on the Network tab of your browser's Developer Tools.

A simple way to prevent this is to expose an API endpoint for `setAllCompleted` requests, and run the same logic on the server instead of the client.

1. Create a new `TasksController` class in the `shared` folder, and move the loop into a new `static` `setAllCompleted` method of that class, which will run on the server.

::: code-group

```ts [src/shared/TasksController.ts]

import { BackendMethod, remult } from 'remult'

import { Task } from './Task'

export class TasksController {

@BackendMethod({ allowed: true })

static async setAllCompleted(completed: boolean) {

const taskRepo = remult.repo(Task)

for (const task of await taskRepo.find()) {

await taskRepo.update(task.id, { completed })

}

}

}

```

:::

The `@BackendMethod` decorator tells Remult to expose the method as an API endpoint (`/api/setAllCompleted`), and the `allowed` option controls who may call it (`{allowed: true}` permits everyone - more details to follow).

**Unlike the front-end `Remult` object, the server implementation interacts directly with the database.**

2. Register the new `TasksController` class by adding it to the `controllers` array of the `options` object passed to `remultApi()`:

::: code-group

```ts [src/server/api.ts]

import { remultApi } from 'remult/remult-sveltekit'

import { Task } from '../shared/Task'

import { TasksController } from '../shared/TasksController' // [!code ++]

export const api = remultApi({

admin: true,

entities: [Task],

controllers: [TasksController], // [!code ++]

})

```

:::

3. Replace the `for` loop in the `setAllCompleted` function of `+page.svelte` with a call to the `setAllCompleted` method in the `TasksController`.

::: code-group

```ts [src/routes/+page.svelte]

const setAllCompleted = async (completed: boolean) => {

// for (const task of await repo(Task).find()) { // [!code --]

// await repo(Task).save({ ...task, completed });// [!code --]

// }// [!code --]

await TasksController.setAllCompleted(completed) // [!code ++]

}

```

:::

::: warning Import TasksController

Remember to add an import of `TasksController` in `+page.svelte`.

:::

::: tip Note

With Remult backend methods, argument types are compile-time checked. :thumbsup:

:::

After the browser is refreshed, the _"Set all..."_ buttons function exactly the same, but now they make only a single request to the backend and are faster.

# SvelteKit - Tutorial - Authentication and Authorization

# Authentication and Authorization

Our todo app is nearly functionally complete, but it still doesn't fulfill a very basic requirement - that users should log in before they can view, create or modify tasks.

Remult provides a flexible mechanism that enables placing **code-based authorization rules** at various levels of the application's API. To maintain high code cohesion, **entity and field-level authorization code should be placed in entity classes**.

**Remult is completely unopinionated when it comes to user authentication.** You are free to use any kind of authentication mechanism. The only requirement is that you provide Remult with an object which implements the Remult `UserInfo` interface:

```ts

export interface UserInfo {

id: string

name?: string

roles?: string[]

}

```

In this tutorial, we'll use [Auth.js](https://authjs.dev/) for authentication.
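Whatever mechanism you choose, the integration point is usually a small mapping from that mechanism's user record to `UserInfo`. Here is a minimal sketch, assuming a hypothetical `ExternalUser` shape (its field names are made up for illustration and do not come from Auth.js or any other library):

```ts
// Mirrors the Remult `UserInfo` interface shown above.
interface UserInfo {
  id: string
  name?: string
  roles?: string[]
}

// Hypothetical record from some auth provider; the field names are assumptions.
interface ExternalUser {
  sub: string
  displayName?: string
  isAdmin?: boolean
}

// Convert the provider's record into the shape Remult expects.
// Returning `undefined` means "nobody is signed in".
function toUserInfo(user: ExternalUser | null | undefined): UserInfo | undefined {
  if (!user) return undefined
  return {
    id: user.sub,
    name: user.displayName,
    roles: user.isAdmin ? ['admin'] : [],
  }
}

console.log(toUserInfo({ sub: '1', displayName: 'Jane', isAdmin: true }))
console.log(toUserInfo(null))
```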

## Tasks CRUD Requires Sign-in

This rule is implemented within the `Task` `@Entity` decorator, by modifying the value of the `allowApiCrud` property. This property can be set to a function that accepts a `Remult` argument and returns a `boolean` value. Let's use the `Allow.authenticated` function from Remult.

::: code-group

```ts [src/shared/Task.ts]

import { Allow } from 'remult'// [!code ++]

@Entity("tasks", {

allowApiCrud: Allow.authenticated // [!code ++]

})

```

:::

After the browser refreshes, **the list of tasks disappears** and the user can no longer create new tasks.

::: details Inspect the HTTP error returned by the API using cURL

```sh

curl -i http://localhost:5173/api/tasks

```

:::

### Server-side Authorization

Open your database (`db/tasks.json`), and click on **Mark All Completed** and **Mark All Incomplete** buttons in turn. You will notice that the `completed` field is toggling.

Although client CRUD requests to `tasks` API endpoints now require a signed-in user, the API endpoint created for our `setAllCompleted` server function remains available to unauthenticated requests. Since the `allowApiCrud` rule we implemented does not affect the server-side code's ability to use the `Task` entity class for performing database CRUD operations, **the `setAllCompleted` function still works as before**.

To fix this, let's implement the same rule using the `@BackendMethod` decorator of the `setAllCompleted` method of `TasksController`.

::: code-group

```ts [src/shared/TasksController.ts]

import { Allow } from 'remult'

@BackendMethod({ allowed: Allow.authenticated })

```

:::

Try toggling the `completed` field again and you will notice that the backend method now also requires an authenticated user.

## User Authentication

Let's set-up `Auth.js` to authenticate users to our app.

### Backend setup

1. Install `auth-core` and `auth-sveltekit`:

```sh

npm i @auth/core @auth/sveltekit -D

```

2. `Auth.js` requires a "secret" - a random string used to hash tokens, sign cookies and generate cryptographic keys.

Create a file called `.env.local` at the root of the project, and set the secret `AUTH_SECRET` to a random string.

::: code-group

```bash [.env.local]

AUTH_SECRET=something-secret

```

:::

:::tip

You can use an [online UUID generator](https://www.uuidgenerator.net/) to generate a completely random string.

:::
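If you'd rather not paste a secret from a website, a random string can also be generated locally. This is a small sketch, assuming a runtime where the Web Crypto API is available as the global `crypto` (recent Node.js versions and browsers); `generateSecret` is a hypothetical helper name, not part of Auth.js.

```ts
// Generate random bytes and return them as a lowercase hex string.
// 32 bytes yield a 64-character secret.
function generateSecret(byteLength = 32): string {
  const bytes = crypto.getRandomValues(new Uint8Array(byteLength))
  return Array.from(bytes, (b) => b.toString(16).padStart(2, '0')).join('')
}

// Print a line that can be pasted into `.env.local`.
console.log(`AUTH_SECRET=${generateSecret()}`)
```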

3. In `src/hooks.server.ts`, let's create two handles:

- `handleAuth` to handle authentication from `Auth.js` with a list of allowed users.

- `handleRemult` to provide the remult context.

Using SvelteKit's `sequence` helper, we chain the two handles so that they run in order. The result looks like this:

::: code-group

```ts [src/hooks.server.ts]

import type { Handle } from '@sveltejs/kit'

import { sequence } from '@sveltejs/kit/hooks'

import { SvelteKitAuth } from '@auth/sveltekit'

import Credentials from '@auth/sveltekit/providers/credentials'

import { api as handleRemult } from './server/api'

import type { UserInfo } from 'remult'

/**

* Users that are allowed to log in.

*/

const validUsers: UserInfo[] = [

{ id: '1', name: 'Jane', roles: ['admin'] },

{ id: '2', name: 'Steve' },

]

/**

* Handle authentication with authjs as an example

* Based on article at https://authjs.dev/reference/sveltekit

*/

export const { handle: handleAuth } = SvelteKitAuth({

trustHost: true,

providers: [

Credentials({

credentials: {

name: {

placeholder: 'Try Steve or Jane',

},

},

authorize: (info) =>

validUsers.find((user) => user.name === info?.name) || null,

}),

],

callbacks: {

session: ({ session, token }) => ({

...session,

user: validUsers.find((user) => user.id === token?.sub),

}),

},

})

export const handle = sequence(

// 1. Handle authentication

handleAuth,

// 2. Handle remult server side

handleRemult,

)

```

:::

This (very) simplistic approach uses the Auth.js [Credentials Provider](https://next-auth.js.org/providers/credentials) to authorize users by looking up the user's name in a predefined list of valid users.

We've configured the `session` `callback` to include the user info as part of the session data, so that Remult on the frontend will have the authorization info.

4. Finally, add `getUser` to `remultApi` to tell remult who is connected. Inside this function, you have access to `event`, where the session was set by Auth.js before.

::: code-group

```ts [src/server/api.ts]

import { remultApi } from 'remult/remult-sveltekit'

import { Task } from '../shared/Task'

import { TasksController } from '../shared/TasksController'

import type { UserInfo } from 'remult' // [!code ++]

export const api = remultApi({

admin: true,

entities: [Task],

controllers: [TasksController],

getUser: async (event) => { // [!code ++]

const auth = await event?.locals?.auth() // [!code ++]

return auth?.user as UserInfo // [!code ++]

}, // [!code ++]

})

```

:::

### Frontend setup

1. Create a new `+layout.server.ts` to update `remult.user`

::: code-group

```ts [src/routes/+layout.server.ts]

import { remult } from 'remult'

import type { LayoutServerLoad } from './$types'

import { redirect } from '@sveltejs/kit'

// will protect every route in the app

export const load = (async () => {

if (!remult.authenticated()) {

throw redirect(303, '/auth/signin')

}

return {

user: remult.user,

}

}) satisfies LayoutServerLoad

```

:::

2. In our front-end (`+layout.svelte`), update the user globally.

::: code-group

```svelte [src/routes/+layout.svelte]

Remult+Sveltekit Todo App

{@render children?.()}

```

:::

The todo app now supports signing in and out, with **all access restricted to signed in users only**.

## Role-based Authorization

Usually, not all application users have the same privileges. You will notice that our `UserInfo` contains a `roles` array. Information contained in this array can be used to enforce role-based authorization.

For our todo app we need to enforce the following authorization rules:

- All signed in users can see the list of tasks.

- All signed in users can set specific tasks as `completed`.

- Only users belonging to the `admin` role can create, delete or edit the titles of tasks.

1. Modify the highlighted lines in the `Task` entity class to enforce the three authorization rules above.

::: code-group

```ts [src/shared/Task.ts]

import { Allow, Entity, Fields } from 'remult'

@Entity('tasks', {

allowApiCrud: Allow.authenticated,

allowApiInsert: 'admin',

allowApiDelete: 'admin',

})

export class Task {

@Fields.id()

id!: string

@Fields.string({

validate: (task) => {

if (task.title.length < 3)

throw 'The title must be at least 3 characters long'

},

allowApiUpdate: 'admin',

})

title: string = ''

@Fields.boolean()

completed: boolean = false

@Fields.createdAt()

completedAt: Date = new Date()

}

```

:::

In our list of users, `validUsers`, we have defined two users, Jane and Steve, with Jane being assigned the `admin` role.

**Sign in to the app alternating between _"Jane"_ and _"Steve"_ to test that the actions restricted to `admin` users are allowed for Jane and blocked for Steve. :lock:**
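The way these options combine can be summarized with a simplified model. The sketch below is not Remult's implementation; `Rule`, `isAllowed` and `taskRules` are hypothetical names that only mirror the rules configured in the `Task` entity above, where a specific option such as `allowApiInsert` takes the place of the general `allowApiCrud` for that operation.

```ts
interface UserInfo {
  id: string
  name?: string
  roles?: string[]
}

// A rule is a boolean, a role name, or a predicate over the current user.
type Rule = boolean | string | ((user?: UserInfo) => boolean)

// Plays the part of `Allow.authenticated` in this model.
const authenticated: Rule = (user) => user !== undefined

function isAllowed(rule: Rule, user?: UserInfo): boolean {
  if (typeof rule === 'boolean') return rule
  if (typeof rule === 'string') return user?.roles?.includes(rule) ?? false
  return rule(user)
}

type TaskAction = 'read' | 'updateCompleted' | 'updateTitle' | 'insert' | 'delete'

// The three authorization rules of the todo app, one entry per action.
const taskRules: Record<TaskAction, Rule> = {
  read: authenticated,
  updateCompleted: authenticated,
  updateTitle: 'admin',
  insert: 'admin',
  delete: 'admin',
}

const jane: UserInfo = { id: '1', name: 'Jane', roles: ['admin'] }
const steve: UserInfo = { id: '2', name: 'Steve' }

console.log(isAllowed(taskRules.insert, jane)) // true
console.log(isAllowed(taskRules.insert, steve)) // false
console.log(isAllowed(taskRules.updateCompleted, steve)) // true
console.log(isAllowed(taskRules.read, undefined)) // false
```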

## Role-based Authorization on the Frontend

From a user experience perspective, it only makes sense that users who can't add or delete tasks would not see these buttons.

Let's reuse the same definitions on the Frontend.

We'll use the entity's metadata to only show the form if the user is allowed to insert:

::: code-group

```svelte [src/routes/+page.svelte]

{#if repo(Task).metadata.apiInsertAllowed()}// [!code ++]

{/if}// [!code ++]

```

:::

And let's do the same for the `delete` button:

::: code-group

```svelte [src/routes/+page.svelte]

setCompleted(task, e.currentTarget.checked)}

/>

{#if repo(Task).metadata.apiDeleteAllowed(task)} // [!code ++]

{/if}// [!code ++]

```

:::

This way we can keep the UI consistent with the `api`'s authorization rules.

- Note: we pass the `task` to the `apiDeleteAllowed` method because the `allowApiDelete` option can be sophisticated and can also be based on the specific item's values.
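To illustrate why the item itself matters, here is a simplified, framework-free model of an item-based rule. The rule below (admins may delete anything, other signed-in users only completed tasks) is a made-up example and differs from the tutorial's admin-only rule; `ItemRule` and `canDeleteTask` are hypothetical names, not Remult APIs.

```ts
interface UserInfo {
  id: string
  roles?: string[]
}

interface Task {
  id: string
  title: string
  completed: boolean
}

// A rule that looks at both the item and the current user.
type ItemRule<T> = (item: T, user?: UserInfo) => boolean

// Hypothetical rule: admins may delete any task, other signed-in users only completed ones.
const canDeleteTask: ItemRule<Task> = (task, user) =>
  user !== undefined && (user.roles?.includes('admin') === true || task.completed)

const tasks: Task[] = [
  { id: 'a', title: 'Read a book', completed: false },
  { id: 'b', title: 'Take a nap', completed: true },
]

const steve: UserInfo = { id: '2' }

// The UI asks the same rule once per row to decide whether to render the delete button.
const deletable = tasks.filter((task) => canDeleteTask(task, steve)).map((task) => task.title)
console.log(deletable) // [ 'Take a nap' ]
```

Because the answer can differ from row to row, the check has to be made per item rather than once for the whole list.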

# SvelteKit - Tutorial - Database

# Database

Up until now the todo app has been using a plain JSON file to store the list of tasks. In production, you will often want to use a proper database. Remult supports a (long) list of relational and non-relational databases. In this tutorial, let's use `Postgres`.

::: tip Learn more

See the [Quickstart](https://remult.dev/docs/quickstart.html#connecting-a-database) article to find out more.

:::

::: warning Don't have Postgres installed? Don't have to.

Don't worry if you don't have Postgres installed locally. In the next step of the tutorial, we'll configure the app to use Postgres in production, and keep using JSON files in our dev environment.

**Simply install `postgres-node` per step 1 below and move on to the [Deployment section of the tutorial](deployment.md).**

:::

1. Install `postgres-node` ("pg").

```sh

npm i pg

```

2. Add an environment variable called `DATABASE_URL` and set it to your connection string:

::: code-group

```sh [.env.local]

DATABASE_URL=postgresql://username:password@host:port/dbname[?paramspec]

```

:::
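If you're unsure which part of the connection string is which, the standard `URL` parser can split it for you. This is just an illustrative sketch; the connection string below uses made-up values and `describeConnectionString` is a hypothetical helper, not something Remult or `pg` provides.

```ts
// Split a Postgres connection string into its parts using the standard URL parser.
// The password is deliberately left out of the result so it is not printed.
function describeConnectionString(connectionString: string) {
  const url = new URL(connectionString)
  return {
    user: decodeURIComponent(url.username),
    host: url.hostname,
    port: url.port || '5432', // 5432 is the conventional Postgres port
    database: url.pathname.replace(/^\//, ''),
    sslmode: url.searchParams.get('sslmode'),
  }
}

// Example values only; replace them with your own.
const info = describeConnectionString(
  'postgresql://todo_user:s3cret@localhost:5432/todo?sslmode=require',
)
console.log(info)
```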

3. Add a `dataProvider` to Remult's handler.

::: code-group

```ts [src/server/api.ts]

import { remultApi } from 'remult/remult-sveltekit'

import { Task } from '../shared/Task'

import { TasksController } from '../shared/TasksController'

import type { UserInfo } from 'remult'

import { createPostgresDataProvider } from 'remult/postgres' // [!code ++]

import { DATABASE_URL } from '$env/static/private' // [!code ++]

export const api = remultApi({

entities: [Task],

controllers: [TasksController],

dataProvider: DATABASE_URL // [!code ++]

? createPostgresDataProvider({ connectionString: DATABASE_URL }) // [!code ++]

: undefined, // [!code ++]

getUser: async (event) => {

const auth = await event?.locals?.auth()

return auth?.user as UserInfo

},

})

```

:::

Once the application restarts, it'll try to use Postgres as the data source for your application.

If `DATABASE_URL` is found, it'll automatically create the `tasks` table for you.

If `DATABASE_URL` is not found, it'll just fall back to our local JSON files.

::: tip

You can also disable this automatic migration behavior. It's not part of this tutorial so if you want to learn more, follow this [link](/docs/migrations).

:::

# SvelteKit - Tutorial - Deployment

# Deployment

Let's deploy the todo app to [railway.app](https://railway.app/).

## Prepare for Production

In order to deploy to a Node.js environment, you need to change SvelteKit's adapter to `@sveltejs/adapter-node`.

1. Install `adapter-node`:

```sh

npm i @sveltejs/adapter-node --save-dev

```

2. In `svelte.config.js`, change the adapter:

::: code-group

```js [svelte.config.js]

import adapter from '@sveltejs/adapter-auto' // [!code --]

import adapter from '@sveltejs/adapter-node' // [!code ++]

```

:::

You also need to adjust the `dataProvider` in the Remult initializer. By default, SvelteKit will try to access your database when running the `npm run build` command, but on Railway the Postgres database is not accessible at build time, which would make your deployment fail.

To solve this, we need to make Sveltekit use the default JSON database when building, and use Postgres only in production.

Make the following changes on your `server/api.ts` file:

::: code-group

```ts [src/server/api.ts]

import { remultApi } from 'remult/remult-sveltekit'

import { Task } from '../shared/Task'

import { TasksController } from '../shared/TasksController'

import type { UserInfo } from 'remult'

import { createPostgresDataProvider } from 'remult/postgres'

import { DATABASE_URL } from '$env/static/private'

import { building } from '$app/environment'; // [!code ++]

export const api = remultApi({

entities: [Task],

controllers: [TasksController],

dataProvider: DATABASE_URL // [!code --]

? createPostgresDataProvider({ connectionString: DATABASE_URL }) // [!code --]

: undefined, // [!code --]

dataProvider: async () => { // [!code ++]

if (DATABASE_URL && !building) { // [!code ++]

return createPostgresDataProvider({ // [!code ++]

connectionString: DATABASE_URL // [!code ++]

}); // [!code ++]

} // [!code ++]

return undefined; // [!code ++]

}, // [!code ++]

getUser: async (event) => {

const auth = await event?.locals?.auth()

return auth?.user as UserInfo

},

})

```

:::
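The condition inside the `dataProvider` function above boils down to a small decision table. The sketch below restates it as a pure function so that the cases are easy to see; `pickDataSource` and the `DataSource` type are hypothetical names used only for this illustration.

```ts
type DataSource = 'postgres' | 'json-files'

// Mirrors the `DATABASE_URL && !building` check:
// Postgres is used only when a connection string exists and we are not in the build step.
function pickDataSource(databaseUrl: string | undefined, building: boolean): DataSource {
  return databaseUrl && !building ? 'postgres' : 'json-files'
}

const exampleUrl = 'postgresql://user:pass@host:5432/db' // made-up value

console.log(pickDataSource(exampleUrl, false)) // postgres   (running in production)
console.log(pickDataSource(exampleUrl, true)) // json-files (during `npm run build`)
console.log(pickDataSource(undefined, false)) // json-files (local dev without Postgres)
console.log(pickDataSource('', false)) // json-files (empty variable)
```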

In order to deploy the todo app to [railway](https://railway.app/) you'll need a `railway` account. You'll also need [Railway CLI](https://docs.railway.app/develop/cli#npm) installed, and you'll need to login to railway from the cli, using `railway login`.

Press Enter to accept the default answer for each question the CLI asks.

1. Create a Railway `project`.

From the terminal in your project folder run:

```sh

railway init

```

2. Select `Empty Project`

3. Set a project name.

4. Once it's done add a database by running the following command:

```sh

railway add

```

5. Select `postgresql` as the database.

6. Modify your `package.json` file to tell Railway which version of Node.js to use, like this:

```jsonc [package.json]

"type": "module",

"engines": { // [!code ++]

"node": ">=20.19" // [!code ++]

}, // [!code ++]

"scripts": {

// ... your scripts

},

```

8. Once that's done run the following command to upload the project to railway:

```sh

railway up

```

::: warning Note

Due to a bug in the way the default Railway build works, the first time you use the `railway up` command it will fail to deploy. Continue following the steps to fix it.

:::

9. Go to the `railway` project's site and click on the project

10. Switch to the `settings` tab

11. Under `Environment` click on `Generate Domain`

12. Under `Build`, change the builder from the default `Nixpacks` to `Railpack`

13. Switch to the `variables` tab

14. Click on `+ New Variable`, and in the `VARIABLE_NAME` click `Add Reference` and select `DATABASE_URL`

15. Add another variable called `AUTH_SECRET` and set it to a random string, you can use an [online UUID generator](https://www.uuidgenerator.net/)

16. Wait for Railway to finish deploying your changes, then click on the newly generated URL to open the app in the browser. You'll see the app live in production (it may take a few minutes to go live).

::: warning Note

If you run into trouble deploying the app to Railway, try using Railway's [documentation](https://docs.railway.app/deploy/deployments).

:::

That's it - our application is deployed to production, on a Node.js server.

Love Remult? Give our repo a star.⭐

# SvelteKit - Tutorial - Go further / Extra

# SvelteKit

## Create a SvelteKit Project

To create a new SvelteKit project, run the following command:

```sh

npx sv@latest create remult-sveltekit-todo

```

During the setup, answer the prompts as follows:

1. **Which Svelte app template?**: ... `minimal` Project

2. **Add type checking with TypeScript?** ... Yes, using `TypeScript` syntax

3. **Select additional options**: ... We didn't select anything for this tutorial. Feel free to adapt it to your needs.

4. **Which package manager?**: ... We chose `npm`; if you prefer another one, feel free to use it.

Once the setup is complete, navigate into the project directory:

```sh

cd remult-sveltekit-todo

```

## Install Required Packages and Remult

Install Remult and any necessary dependencies by running:

```sh

npm install remult --save-dev

```

## Bootstrap Remult

To set up Remult in your SvelteKit project:

1. Create your remult `api`

::: code-group

```ts [src/server/api.ts]

import { remultApi } from 'remult/remult-sveltekit'

export const api = remultApi({})

```

:::

2. Create a remult `api route`

::: code-group

```ts [src/routes/api/[...remult]/+server.ts]

import { api } from '../../../server/api'

export const { GET, POST, PUT, DELETE } = api

```

:::

## Final Tweaks

Remult uses TypeScript decorators to enhance classes into entities. To enable decorators in your SvelteKit project, modify the `tsconfig.json` file by adding the following to the `compilerOptions` section:

```json [tsconfig.json]

{

"compilerOptions": {

"experimentalDecorators": true // [!code ++]

}

}

```

## Run the App

To start the development server, run the following command:

```sh

npm run dev

```

Your SvelteKit app will be available at [http://localhost:5173](http://localhost:5173).

Your SvelteKit project with Remult is now up and running.

# Extra

## Extra - Remult in other SvelteKit routes

To make Remult available across all SvelteKit routes, add its handler to `hooks.server.ts`:

::: code-group

```ts [src/hooks.server.ts]

import { sequence } from '@sveltejs/kit/hooks'

import { api as handleRemult } from './server/api'

export const handle = sequence(

// Manage your sequence of handlers here

handleRemult,

)

```

:::

## Extra - Universal load & SSR

To use Remult in an SSR `PageLoad`, pass the `event`'s `fetch` to Remult. The data is then loaded on the server without being reloaded on the frontend, and all API rules are respected even when the code runs on the server.

::: code-group

```ts [src/routes/+page.ts]

import { remult, repo } from 'remult'

import type { PageLoad } from './$types'

// Adjust this path to wherever your entity lives

import { Task } from '../demo/todo/Task'

export const load = (async (event) => {

// Instruct remult to use SvelteKit's special fetch,

// so that this universal load works in both SSR & CSR

remult.useFetch(event.fetch)

return repo(Task).find()

}) satisfies PageLoad

```

:::

::: tip

You can add this in `+layout.ts` as well, and all routes **under** it will have the correct fetch out of the box.

:::

## Extra - Server load

If you return a Remult entity from the `load` function of a `+page.server.ts`, SvelteKit will complain and show this error:

```bash

Error: Data returned from `load` while rendering / is not serializable:

Cannot stringify arbitrary non-POJOs (data.tasks[0])

```

To fix this, use `repo(Entity).toJson()` in the server load function and `repo(Entity).fromJson()` in the `.svelte` file to serialize and deserialize the entity.

::: code-group

```ts [src/routes/+page.server.ts]

import { repo } from 'remult'

import type { PageServerLoad } from './$types'

import { Task } from '../demo/todo/Task'

export const load = (async () => {

const tasks = repo(Task).toJson(await repo(Task).find())

return {

tasks,

}

}) satisfies PageServerLoad

```

```svelte [src/routes/+page.svelte]

```

:::
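The round trip can be pictured with a small, framework-free model. This is only a sketch of the idea: the `Task` class, `toJson` and `fromJson` below are hypothetical stand-ins written by hand, not the Remult functions, and the "wire" is simplified to plain JSON text.

```ts
// A class instance with a Date field: not a plain object (POJO).
class Task {
  id = ''
  title = ''
  createdAt = new Date(0)
}

// Hand-written stand-in for `toJson`: class instance -> plain, serializable object.
function toJson(task: Task) {
  return { id: task.id, title: task.title, createdAt: task.createdAt.toISOString() }
}

// Hand-written stand-in for `fromJson`: plain object -> class instance.
function fromJson(json: { id: string; title: string; createdAt: string }): Task {
  const task = new Task()
  task.id = json.id
  task.title = json.title
  task.createdAt = new Date(json.createdAt)
  return task
}

const original = new Task()
original.id = '1'
original.title = 'Clean car'
original.createdAt = new Date('2024-01-01T00:00:00.000Z')

// Simulate the server-to-browser hop: only text crosses the wire.
const wire = JSON.stringify(toJson(original))
const restored = fromJson(JSON.parse(wire))

console.log(Object.getPrototypeOf(toJson(original)) === Object.prototype) // true: a POJO
console.log(restored instanceof Task) // true
console.log(restored.createdAt.getTime() === original.createdAt.getTime()) // true
```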

---

#### Since `@sveltejs/kit@2.11.0`, there is a new feature: [Universal-hooks-transport](https://svelte.dev/docs/kit/hooks#Universal-hooks-transport)

With this new feature, you can get rid of `repo(Entity).toJson()` and `repo(Entity).fromJson()` thanks to this file: `hooks.ts`.

::: code-group

```ts [src/hooks.ts]

import { repo, type ClassType } from 'remult'

import { Task } from './demo/todo/Task'

import type { Transport } from '@sveltejs/kit'

import { api } from './server/api'

// You can have:

// A/ a local entity array, to handle only these entities (like here)

// or

// B/ import a global entity array that will be

// shared between backend and frontend (not in ./server/api.ts)

const entities = [Task]

export const transport: Transport = {

remultTransport: {

encode: (value: any) => {

for (let index = 0; index < entities.length; index++) {

const element = entities[index] as ClassType

if (value instanceof element) {

return {

...repo(element).toJson(value),

entity_key: repo(element).metadata.key,

}

}

}

},

decode: (value: any) => {

for (let index = 0; index < entities.length; index++) {

const element = entities[index] as ClassType

if (value.entity_key === repo(element).metadata.key) {

return repo(element).fromJson(value)

}

}

},

},

}

```

```ts [src/routes/+page.server.ts]

import { repo } from 'remult'

import type { PageServerLoad } from './$types'

import { Task } from '../demo/todo/Task'

export const load = (async () => {

// const tasks = repo(Task).toJson(await repo(Task).find()) // [!code --]

const tasks = await repo(Task).find()

return {

tasks,

}

}) satisfies PageServerLoad

```

```svelte [src/routes/+page.svelte]

```

:::

## Extra - Svelte 5 & Reactivity

Remult is fully compatible with Svelte 5, runes, and reactivity.

To take full advantage of it, add this snippet:

::: code-group

```html [src/routes/+layout.svelte]

```

:::

Then you can use `$state` and `$derived` like anywhere else.

::: code-group

```html [src/routes/+page.svelte]

```

:::

### Focus on auth reactivity

Anywhere in your frontend code you can set `remult.user = xxx` and all remult auth reactivity will work (remult.user, remult.authenticated(), ...)

```ts

const logout = async () => {

try {

remult.user = await AuthController.signOut()

} catch (error) {

alert((error as Error).message)

}

}

```

If you want `remult.user` to be filled in SSR, here is the code:

::: code-group

```svelte [src/routes/+layout.svelte]

{@render children?.()}

```

```ts [src/routes/+layout.server.ts]

import { remult } from 'remult'

import type { LayoutServerLoad } from './$types'

export const load = (async () => {

return { user: remult.user }

}) satisfies LayoutServerLoad

```

:::

And you can trigger it with:

```ts

import { invalidateAll } from '$app/navigation'

const logout = async () => {

try {

await AuthController.signOut()

invalidateAll() // [!code ++] // This will trigger the layout.server.ts load function

} catch (error) {

alert((error as Error).message)

}

}

```

## Extra - Remote Functions

SvelteKit is introducing a new feature called [Remote Functions](https://svelte.dev/docs/kit/remote-functions). It's early, but we want to see how it integrates with Remult.

Here is the default doc example (zod & drizzle or valibot & prisma, ...):

```ts

import { error } from '@sveltejs/kit'

import { query } from '$app/server'

import * as v from 'valibot' // or zod, ... any standard schema library

import * as db from '$lib/server/database' // with drizzle, prisma, pg, ... any database library

export const getPost = query(

v.string(), // Standard Schema

async (slug) => {

const [post] = await db.sql`SELECT * FROM post WHERE slug = ${slug}`

if (!post) error(404, 'Not found')

return post

},

)

```

With the Remult library, you have both:

- the database layer

- the standard schema layer

So you can replace the previous code with this:

```ts

import { error } from '@sveltejs/kit'

import { query } from '$app/server'

import { standardSchema, repo } from 'remult'

import { Post } from '$lib/entities'

export const getPost = query(

standardSchema(repo(Post), 'slug'), // Standard Schema

async ({ slug }) => {

const post = await repo(Post).findFirst({ slug })

if (!post) error(404, 'Not found')

return post

},

)

```

We are excited to see how you will use it 🚀

::: tip

More info about [remult standard schema](/docs/standard-schema)

:::
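For readers who haven't met Standard Schema before, the sketch below shows roughly what such a schema object looks like when written by hand. It is a reduced, synchronous-only version of the interface, typed locally as `MinimalStandardSchema` (a hypothetical name); `slugSchema` is an illustration and is not what Remult's `standardSchema` returns internally.

```ts
type Issue = { message: string; path?: ReadonlyArray<PropertyKey> }

// Either a validated value or a list of issues.
type Result<T> = { value: T; issues?: undefined } | { issues: ReadonlyArray<Issue> }

// Reduced form of the Standard Schema shape: everything lives under the `~standard` key.
interface MinimalStandardSchema<T> {
  '~standard': {
    version: 1
    vendor: string
    validate: (value: unknown) => Result<T>
  }
}

// A hand-written schema accepting `{ slug: string }` with a non-empty slug.
const slugSchema: MinimalStandardSchema<{ slug: string }> = {
  '~standard': {
    version: 1,
    vendor: 'example',
    validate: (value) => {
      if (typeof value === 'object' && value !== null && 'slug' in value) {
        const slug = (value as { slug: unknown }).slug
        if (typeof slug === 'string' && slug.length > 0) return { value: { slug } }
      }
      return { issues: [{ message: 'slug must be a non-empty string', path: ['slug'] }] }
    },
  },
}

const ok = slugSchema['~standard'].validate({ slug: 'hello-world' })
const bad = slugSchema['~standard'].validate({ slug: 42 })

console.log(ok.issues ? 'invalid' : ok.value.slug) // hello-world
console.log(bad.issues ? bad.issues[0]?.message : 'valid') // slug must be a non-empty string
```

Any library that produces an object of this shape can be passed where a Standard Schema is expected, which is what makes the validation layer interchangeable.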

# Next.js - Tutorial - Setup

# Build a Full-Stack Next.js Application

### Create a simple todo app with Remult using Next.js

In this tutorial, we are going to create a simple app to manage a task list. We'll use `Next.js`, and Remult as our full-stack CRUD framework. For deployment to production, we'll use [Vercel](https://vercel.com/) and a `PostgreSQL` database.

By the end of the tutorial, you should have a basic understanding of Remult and how to use it to accelerate and simplify full stack app development.

### Prerequisites

This tutorial assumes you are familiar with `TypeScript`, `React` and `Next.js`.

Before you begin, make sure you have [Node.js](https://nodejs.org) and [git](https://git-scm.com/) installed.

# Setup for the Tutorial

This tutorial requires setting up a Next.js project and a few lines of code to add Remult.

You can either **use a starter project** to speed things up, or go through the **step-by-step setup**.

## Option 1: Clone the Starter Project

1. Clone the _remult-nextjs-todo_ repository from GitHub and install its dependencies.

```sh

git clone https://github.com/remult/nextjs-app-starter.git remult-nextjs-todo

cd remult-nextjs-todo

npm install

```

2. Open your IDE.

3. Open a terminal and run the `dev` npm script.

```sh

npm run dev

```

The default Next.js app main screen should be displayed (except for the styles which were modified for the tutorial).

At this point, our starter project is up and running. We are now ready to move to the [next step of the tutorial](./entities.md) and start creating the task list app.

## Option 2: Step-by-step Setup

### Create a Next.js project

1. Create the new Next.js project.

```sh

npx -y create-next-app@latest remult-nextjs-todo

```

Answer the questions as follows:

```sh

✔ Would you like to use TypeScript? ... Yes

✔ Would you like to use ESLint? ... No

✔ Would you like to use Tailwind CSS? ... No

✔ Would you like to use `src/` directory? ... Yes

✔ Would you like to use App Router? (recommended) ... Yes

✔ Would you like to customize the default import alias? ... No

```

2. Go to the created folder.

```sh

cd remult-nextjs-todo

```

### Install Remult

```sh

npm i remult

```

### Bootstrap Remult in the back-end

Remult is bootstrapped in a `Next.js` app using a [catch all dynamic API route](https://nextjs.org/docs/app/building-your-application/routing/dynamic-routes#catch-all-segments) that passes the handling of requests to an object created using the `remultApi` function.

1. Open your IDE.

2. In the `src` directory, create a file called `api.ts` with the following code:

```ts

// src/api.ts

import { remultApi } from 'remult/remult-next'

export const api = remultApi({})

```

3. Create an `api` directory within the app folder, and inside it, create a `[...remult]` subdirectory. Inside the `app/api/[...remult]` directory, craft a `route.ts` file with the following code. This file functions as a catch all route for the Next.js API route, effectively managing all incoming API requests.

```ts

// src/app/api/[...remult]/route.ts

import { api } from '../../../api'

export const { POST, PUT, DELETE, GET } = api

```

### Enable TypeScript decorators

Add the following entry to the `compilerOptions` section of the `tsconfig.json` file to enable the use of decorators in the React app.

```json{7}

// tsconfig.json

{

...

"compilerOptions": {

...

"experimentalDecorators": true // add this

...

}

...

}

```

### Run the app

Open a terminal and start the app.

```sh

npm run dev

```

The default `Next.js` main screen should be displayed.

### Remove Next.js default styles

The Next.js default styles won't fit our todo app. If you'd like a nice-looking app, replace the contents of `app/globals.css` with [this CSS file](https://raw.githubusercontent.com/remult/nextjs-app-starter/main/src/app/globals.css). Otherwise, you can simply **delete the contents of `app/globals.css`**.

### Setup completed

At this point, our starter project is up and running. We are now ready to move to the [next step of the tutorial](./entities.md) and start creating the task list app.

# Next.js - Tutorial - Entities

# Entities

Let's start coding the app by defining the `Task` entity class.

The `Task` entity class will be used:

- As a model class for client-side code

- As a model class for server-side code

- By `remult` to generate API endpoints, API queries, and database commands

The `Task` entity class we're creating will have an auto-generated `id` field, a `title` field, a `completed` field and an auto-generated `createdAt` field. The entity's API route ("tasks") will include endpoints for all `CRUD` operations.

## Define the Model

1. Create a `shared` folder under the `src` folder. This folder will contain code shared between frontend and backend.

2. Create a file `Task.ts` in the `shared/` folder, with the following code:

```ts

// src/shared/Task.ts

import { Entity, Fields } from 'remult'

@Entity('tasks', {

allowApiCrud: true,

})

export class Task {

@Fields.id()

id = ''

@Fields.string()

title = ''

@Fields.boolean()

completed = false

@Fields.createdAt()

createdAt?: Date

}

```

3. In `src/api.ts`, register the `Task` entity with Remult by adding `entities: [Task]` to the `options` object passed to the `remultApi()` function:

```ts{4,7}

// src/api.ts

import { remultApi } from "remult/remult-next"

import { Task } from "./shared/Task"

export const api = remultApi({

entities: [Task]

})

```

The [@Entity](../../docs/ref_entity.md) decorator tells Remult this class is an entity class. The decorator accepts a `key` argument (used to name the API route and as a default database collection/table name), and an `options` argument used to define entity-related properties and operations, discussed in the next sections of this tutorial.

To initially allow all CRUD operations for tasks, we set the option [allowApiCrud](../../docs/ref_entity.md#allowapicrud) to `true`.

The [@Fields.id](../../docs/field-types.md#fields-id) decorator tells Remult to automatically generate a unique id using `crypto.randomUUID()`. This value can't be changed after the entity is created.

The [@Fields.string](../../docs/field-types.md#fields-string) decorator tells Remult the `title` property is an entity data field of type `String`. This decorator is also used to define field-related properties and operations, discussed in the next sections of this tutorial and the same goes for `@Fields.boolean` and the `completed` property.

The [@Fields.createdAt](../../docs/field-types.md#fields-createdat) decorator tells Remult to automatically generate a `createdAt` field with the current date and time.

::: tip

For a complete list of supported field types, see the [Field Types](../../docs/field-types.md) section in the Remult documentation.

:::
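To picture what the decorators give you on insert, here is a rough, framework-free imitation. `newTask` and `TaskData` are hypothetical names used for this sketch and it is not how Remult is implemented; it assumes a runtime where the global `crypto.randomUUID()` is available (recent Node.js versions and browsers).

```ts
interface TaskData {
  id: string
  title: string
  completed: boolean
  createdAt: Date
}

// Imitates the defaults described above: a generated id, `completed` defaulting to false,
// and a `createdAt` timestamp taken at creation time.
function newTask(title: string, now: Date = new Date()): TaskData {
  return {
    id: crypto.randomUUID(),
    title,
    completed: false,
    createdAt: now,
  }
}

const task = newTask('Clean car')
console.log(task.id.length) // 36, the length of a UUID string
console.log(task.completed) // false
```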

## Test the API

Now that the `Task` entity is defined, we can start using the REST API to query and add tasks.

1. Open a browser with the url: [http://localhost:3000/api/tasks](http://localhost:3000/api/tasks), and you'll see that you get an empty array.

2. Use `curl` to `POST` a new task - _Clean car_.

```sh

curl http://localhost:3000/api/tasks -d "{\"title\": \"Clean car\"}" -H "Content-Type: application/json"

```

3. Refresh the browser for the url: [http://localhost:3000/api/tasks](http://localhost:3000/api/tasks) and see that the array now contains one item.

4. Use `curl` to `POST` a few more tasks:

```sh

curl http://localhost:3000/api/tasks -d "[{\"title\": \"Read a book\"},{\"title\": \"Take a nap\", \"completed\":true },{\"title\": \"Pay bills\"},{\"title\": \"Do laundry\"}]" -H "Content-Type: application/json"

```

- Note that the `POST` endpoint can accept a single `Task` or an array of `Task`s.

5. Refresh the browser again, to see that the tasks were stored in the db.
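The same requests can also be issued from TypeScript. The following is a minimal sketch using the standard `fetch` API; `buildInsertRequest` and `postTasks` are hypothetical helper names (not part of Remult), and the URL assumes the dev server from the steps above is listening on port 3000.

```ts
// Shape of the payload sent by the curl commands above.
interface NewTask {
  title: string
  completed?: boolean
}

const TASKS_URL = 'http://localhost:3000/api/tasks'

// Hypothetical helper: builds the same request the curl commands send.
// Accepts a single task or an array, mirroring the POST endpoint.
function buildInsertRequest(tasks: NewTask | NewTask[]) {
  return {
    url: TASKS_URL,
    init: {
      method: 'POST',
      headers: { 'Content-Type': 'application/json' },
      body: JSON.stringify(tasks),
    },
  }
}

// Hypothetical helper: sends the request. Only call it while the dev server is running.
async function postTasks(tasks: NewTask | NewTask[]): Promise<unknown> {
  const { url, init } = buildInsertRequest(tasks)
  const response = await fetch(url, init)
  if (!response.ok) throw new Error(`POST failed with status ${response.status}`)
  return response.json()
}
```

For example, `postTasks({ title: 'Clean car' })` would send the same body as the first `curl` command.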

::: warning Wait, where is the backend database?

While Remult supports [many relational and non-relational databases](https://remult.dev/docs/installation/database/), in this tutorial we start by storing entity data in a backend **JSON file**. Notice that a `db` folder has been created under the root folder, with a `tasks.json` file containing the created tasks.

:::

## Admin UI

### Enabling the Admin UI

Add the Admin UI to your Next.js application by setting the `admin` option to `true` in the `remultApi()` call:

::: code-group

```ts [src/api.ts]

import { remultApi } from 'remult/remult-next'

import { Task } from './shared/Task'

const api = remultApi({

entities: [Task],

admin: true,

})

export const { POST, PUT, DELETE, GET } = api

```

:::

### Accessing and Using the Admin UI

Navigate to `http://localhost:3000/api/admin` to access the Admin UI. Here, you can perform CRUD operations on your entities, view their relationships via the Diagram entry, and ensure secure management with the same validations and authorizations as your application.

### Features

- **CRUD Operations**: Directly create, update, and delete tasks through the Admin UI.

- **Entity Diagram**: Visualize relationships between entities for better data structure understanding.

- **Security**: Operations are secure, adhering to application-defined rules.

## Display the Task List

Let's start developing the web app by displaying the list of existing tasks in a React component.

In the `src` folder create a `components` folder and in it create a `todo.tsx` file and place the following code in it:

```tsx

// src/components/todo.tsx

'use client'

import { useEffect, useState } from 'react'

import { remult } from 'remult'

import { Task } from '../shared/Task'

const taskRepo = remult.repo(Task)

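export default function Todo() {
  // Type the state explicitly so `setTasks` accepts the `Task[]` returned by `find`
  const [tasks, setTasks] = useState<Task[]>([])

  useEffect(() => {
    taskRepo.find().then(setTasks)
  }, [])

  return (
    <div>
      <h1>Todos</h1>
      <main>
        {tasks.map((task) => {
          return (
            <div key={task.id}>
              <input type="checkbox" checked={task.completed} />
              {task.title}
            </div>
          )
        })}
      </main>
    </div>
  )
}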

```

Here's a quick overview of the different parts of the code snippet:

- `taskRepo` is a Remult [Repository](../../docs/ref_repository.md) object used to fetch and create Task entity objects.

- `tasks` is a React state variable that holds the array of `Task` objects.

- React's useEffect hook is used to call the Remult [repository](../../docs/ref_repository.md)'s [find](../../docs/ref_repository.md#find) method to fetch tasks from the server once when the React component is loaded.

### Display the todo Component

Replace the contents of `src/app/page.tsx` with the following code:

```tsx

// src/app/page.tsx

import Todo from '../components/todo'

export default function Home() {

  return <Todo />

}

```

After the browser refreshes, the list of tasks appears.

# Next.js - Tutorial - Paging, Sorting and Filtering

# Paging, Sorting and Filtering

The RESTful API created by Remult supports **server-side paging, sorting, and filtering**. Let's use that to limit, sort and filter the list of tasks.

## Limit Number of Fetched Tasks

Since our database may eventually contain a lot of tasks, it makes sense to use a **paging strategy** to limit the number of tasks retrieved in a single fetch from the back-end database.

Let's limit the number of fetched tasks to `20`.

In the `useEffect` hook defined in the `Todo` component, pass an `options` argument to the `find` method call and set its `limit` property to 20.

```ts{9-13}

// src/components/todo.tsx

//...

export default function Todo() {

//...

useEffect(() => {

taskRepo

.find({

limit: 20

})

.then(setTasks)

}, [])

//...

}

```

There aren't enough tasks in the database for this change to have an immediate effect, but it will have one later on when we add more tasks.

::: tip

To query subsequent pages, use the [Repository.find()](../../docs/ref_repository.md#find) method's `page` option.

:::
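To make the relationship between `limit` and `page` concrete, here is a small in-memory sketch. It does not call Remult: `paginate` is a hypothetical helper, and it assumes pages are numbered starting at 1, so check the `find` reference for the exact semantics.

```ts
// Hypothetical helper illustrating limit/page arithmetic on a plain array.
// Assumption: page numbers start at 1.
function paginate<T>(rows: T[], limit: number, page = 1): T[] {
  const start = (Math.max(page, 1) - 1) * limit
  return rows.slice(start, start + limit)
}

// 45 fake task titles: "Task 1" ... "Task 45"
const titles = Array.from({ length: 45 }, (_, i) => `Task ${i + 1}`)

const firstPage = paginate(titles, 20) // "Task 1" .. "Task 20"
const thirdPage = paginate(titles, 20, 3) // "Task 41" .. "Task 45" (partial page)
console.log(firstPage.length, thirdPage.length) // 20 5
```

With the repository, the corresponding call would be along the lines of `taskRepo.find({ limit: 20, page: 3 })`.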

## Sorting By Creation Date

We would like old tasks to appear first in the list, and new tasks to appear last. Let's sort the tasks by their `createdAt` field.

In the `useEffect` hook, set the `orderBy` property of the `find` method call's `options` argument to an object that contains the fields you want to sort by.

Use "asc" and "desc" to determine the sort order.

```ts{7}

// src/components/todo.tsx

useEffect(() => {

taskRepo

.find({

limit: 20,

orderBy: { createdAt: "asc" }

})

.then(setTasks)

}, [])

```
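As an illustration of what the two sort directions mean for a date field, the following in-memory sketch sorts plain objects by `createdAt`. It is not Remult code: `TaskRow` and `sortByCreatedAt` are hypothetical names used only for this example.

```ts
// Hypothetical row shape used only for this illustration.
interface TaskRow {
  title: string
  createdAt: Date
}

type Direction = 'asc' | 'desc'

// "asc" puts the oldest task first, "desc" puts the newest task first.
function sortByCreatedAt(rows: TaskRow[], direction: Direction): TaskRow[] {
  const sign = direction === 'asc' ? 1 : -1
  // Copy before sorting so the input array is left untouched.
  return [...rows].sort(
    (a, b) => sign * (a.createdAt.getTime() - b.createdAt.getTime()),
  )
}

const rows: TaskRow[] = [
  { title: 'Pay bills', createdAt: new Date('2024-01-03') },
  { title: 'Clean car', createdAt: new Date('2024-01-01') },
  { title: 'Read a book', createdAt: new Date('2024-01-02') },
]

const oldestFirst = sortByCreatedAt(rows, 'asc').map((r) => r.title)
const newestFirst = sortByCreatedAt(rows, 'desc').map((r) => r.title)
```

Here `oldestFirst` starts with "Clean car" and `newestFirst` starts with "Pay bills", which matches the goal of showing old tasks first when using `"asc"`.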

## Server Side Filtering

Remult supports sending filter rules to the server to query only the tasks that we need.

Adjust the `useEffect` hook to fetch only `completed` tasks.

```ts{8}

// src/components/todo.tsx

useEffect(() => {

taskRepo

.find({

limit: 20,

orderBy: { createdAt: "asc" },

where: { completed: true }

})

.then(setTasks)

}, [])

```

::: warning Note

Because the `completed` field is of type `boolean`, the argument is **compile-time checked to be of the `boolean` type**. Setting the `completed` filter to `undefined` causes it to be ignored by Remult.

:::
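The "`undefined` is ignored" behavior is convenient when a filter is optional. The sketch below mimics it in memory so it can run without a server; `matches` and `filterFor` are hypothetical helpers, not Remult APIs.

```ts
// Hypothetical row and filter shapes used only for this illustration.
interface TaskRow {
  title: string
  completed: boolean
}

type TaskFilter = { completed?: boolean | undefined }

// Stand-in for the server-side check: an undefined filter value matches every row.
function matches(task: TaskRow, where: TaskFilter): boolean {
  return where.completed === undefined || task.completed === where.completed
}

// Maps a UI choice to a filter object; "all" leaves `completed` undefined.
function filterFor(view: 'all' | 'done' | 'open'): TaskFilter {
  return { completed: view === 'all' ? undefined : view === 'done' }
}

const sample: TaskRow[] = [
  { title: 'Read a book', completed: false },
  { title: 'Take a nap', completed: true },
]

const done = sample.filter((t) => matches(t, filterFor('done')))
const all = sample.filter((t) => matches(t, filterFor('all')))
console.log(done.length, all.length) // 1 2
```

In the component, an object built this way could be passed as the `where` option of `taskRepo.find`.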

Play with different filtering values, then comment the filter out, since we do need all the tasks:

```ts{6}

useEffect(() => {

taskRepo

.find({

limit: 20,

orderBy: { createdAt: "asc" },

//where: { completed: true },

})

.then(setTasks);

}, []);

```

::: tip Learn more

Explore the reference for a [comprehensive list of filtering options](../../docs/entityFilter.md).

:::
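Because paging, sorting and filtering happen on the server, any REST client can request the same data with a query string. The sketch below builds such a URL by hand. The parameter names (`_limit`, `_page`, `_sort`, `_order`, and a plain `field=value` filter) are assumptions based on Remult's REST API conventions and should be verified against the REST API reference; `buildTasksUrl` is a hypothetical helper.

```ts
// Hypothetical query shape for this illustration.
interface TaskQuery {
  limit?: number
  page?: number
  sort?: string
  order?: 'asc' | 'desc'
  completed?: boolean
}

// Assumed parameter names: _limit, _page, _sort, _order, and field=value filters.
function buildTasksUrl(base: string, query: TaskQuery): string {
  const params = new URLSearchParams()
  if (query.limit !== undefined) params.set('_limit', String(query.limit))
  if (query.page !== undefined) params.set('_page', String(query.page))
  if (query.sort !== undefined) params.set('_sort', query.sort)
  if (query.order !== undefined) params.set('_order', query.order)
  if (query.completed !== undefined) params.set('completed', String(query.completed))
  const qs = params.toString()
  return qs ? `${base}?${qs}` : base
}

const tasksUrl = buildTasksUrl('http://localhost:3000/api/tasks', {
  limit: 20,
  sort: 'createdAt',
  order: 'asc',
  completed: true,
})
console.log(tasksUrl)
// http://localhost:3000/api/tasks?_limit=20&_sort=createdAt&_order=asc&completed=true
```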

# Next.js - Tutorial - CRUD Operations

# CRUD Operations

## Adding new tasks

Now that we can see the list of tasks, it's time to add a few more.

Add the highlighted `newTaskTitle` state and `addTask` function to the `Todo` component:

```ts{5-16}

// src/components/todo.tsx

export default function Todo() {

const [tasks, setTasks] = useState<Task[]>([])

const [newTaskTitle, setNewTaskTitle] = useState("")

const addTask = async (e: FormEvent) => {

e.preventDefault()

try {

const newTask = await taskRepo.insert({ title: newTaskTitle })

setTasks([...tasks, newTask])

setNewTaskTitle("")

} catch (error: unknown) {

alert((error as { message: string }).message)

}

}

//...

```

- The call to `taskRepo.insert` makes a `POST` request to the server, inserts the new task into the `db`, and returns the new `Task` object with all its info (including the generated `id`).

::: warning Import FormEvent

This code requires adding an import of `FormEvent` from `react`.

:::
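The `catch` block above casts the error to `{ message: string }`. If you prefer to avoid the cast, a small helper can narrow the `unknown` value first. This is a minimal sketch; `getErrorMessage` is a hypothetical name, and the fallback text is arbitrary.

```ts
// Hypothetical helper: extracts a readable message from an unknown thrown value.
function getErrorMessage(error: unknown): string {
  // Validation code may throw a plain string, e.g. `throw "Too Short"`.
  if (typeof error === 'string') return error
  // Error instances and plain objects with a string `message` property.
  if (typeof error === 'object' && error !== null && 'message' in error) {
    const message = (error as { message: unknown }).message
    if (typeof message === 'string') return message
  }
  return 'Unknown error'
}
```

In `addTask`, the `catch` block could then call `alert(getErrorMessage(error))`.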

Next, let's adjust the `tsx` to display a form for adding new tasks:

```tsx{7-14}

// src/components/todo.tsx

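return (
  <div>
    <h1>Todos</h1>
    <main>
      <form onSubmit={addTask}>
        <input
          value={newTaskTitle}
          placeholder="What needs to be done?"
          onChange={e => setNewTaskTitle(e.target.value)}
        />
        <button>Add</button>
      </form>
      {tasks.map(task => {
        return (
          <div key={task.id}>
            <input type="checkbox" checked={task.completed} />
            {task.title}
          </div>
        )
      })}
    </main>
  </div>
)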

```

Try adding a few tasks to see how it works.

## Mark Tasks as completed

Modify the contents of the `tasks.map` iteration within the `Todo` component to include the following `setCompleted` function and call it in the input's `onChange` event.

```tsx{5-6,8-9,16}

// src/components/todo.tsx

{

tasks.map(task => {

const setTask = (value: Task) =>

setTasks(tasks => tasks.map(t => (t === task ? value : t)))

const setCompleted = async (completed: boolean) =>